At first glance, Dopple AI looks like another entry in the fast-growing AI companion space. It offers fictional characters, customizable personalities, and open-ended conversations that promise fewer restrictions than mainstream alternatives. But the reality is more complicated.

Dopple AI sits at a crossroads between creative freedom, technical ambition, and growing user frustration. For some, it represents one of the most immersive roleplay experiences currently available. For others, it feels unstable, poorly moderated, and increasingly unreliable. Understanding Dopple AI requires moving past surface-level feature lists and looking at how the platform actually behaves over time.

What Dopple AI Is Really Built For

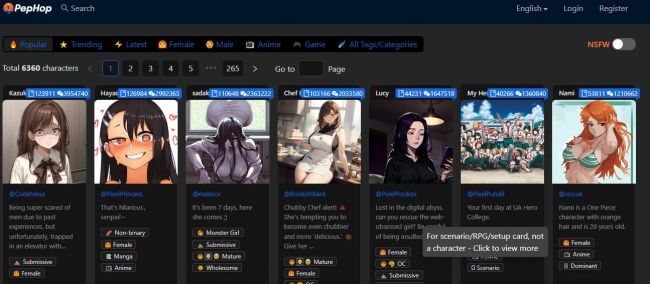

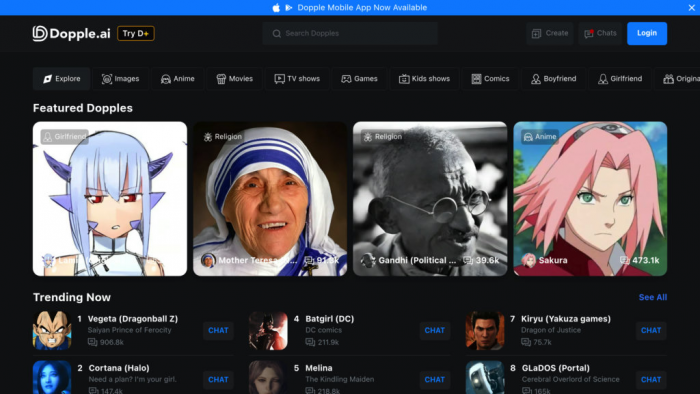

Dopple AI is not designed as a productivity assistant or a mental health companion. Its core purpose is entertainment through character simulation. Users interact with AI personas called Dopples, which can be based on anime characters, fictional universes, historical figures, or entirely original creations.

Unlike heavily filtered platforms, Dopple emphasizes uncensored conversation, especially for roleplay and storytelling. This positioning is deliberate. It appeals to users who felt constrained by stricter moderation on competitors like Character.AI or Replika. The result is a platform that prioritizes imagination and immersion over guardrails.

That choice shapes everything else, including its technical architecture, community behavior, and controversies.

Company Background and Technical Direction

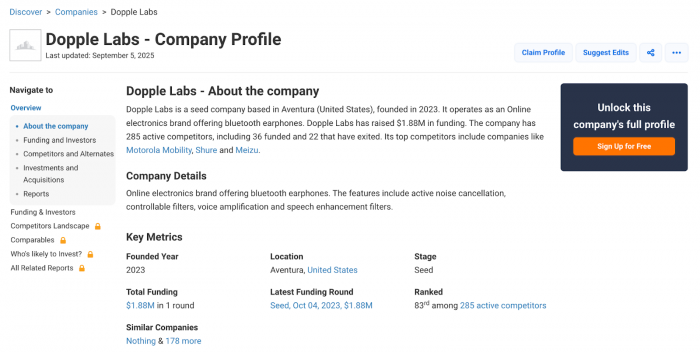

Dopple AI is operated by Dopple Labs Inc., a privately held company founded in 2023 with headquarters in Miami and additional operations in Palo Alto. The team is relatively small, reportedly between 11 and 50 employees, which matters when evaluating platform stability and response times to issues.

On the technical side, Dopple relies on fine-tuned Llama-based large language models optimized for conversational flow rather than factual accuracy. For long-term memory it uses retrieval-augmented generation (RAG). Summaries of past interactions are embedded and stored in a vector database, and relevant ones are retrieved back into the model's context during later conversations. This allows Dopples to remember relationships and events beyond a single chat session.

In theory, this memory system is one of Dopple’s strongest differentiators. In practice, user feedback suggests that memory quality can vary dramatically depending on server load, updates, and character configuration.
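Dopple has not published its memory implementation, so the following is only a minimal sketch of how embedding-based chat memory generally works. It makes two simplifying assumptions. First, a toy bag-of-words vector stands in for a real neural embedding model. Second, a plain Python list stands in for a vector database. The names `embed`, `MemoryStore`, and `build_prompt` are hypothetical and illustrative only.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for a neural embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class MemoryStore:
    """Hypothetical in-memory stand-in for a vector database of chat summaries."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def add_summary(self, summary: str) -> None:
        # Store each summary alongside its embedding, as a vector DB would.
        self.entries.append((summary, embed(summary)))

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        # Rank stored summaries by similarity to the new message; keep the top k.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [summary for summary, _ in ranked[:k]]


def build_prompt(store: MemoryStore, persona: str, user_message: str, k: int = 2) -> str:
    """Prepend retrieved memories to the prompt so the model can 'remember' them."""
    memories = store.retrieve(user_message, k)
    memory_block = "\n".join(f"- {m}" for m in memories)
    return (
        f"You are {persona}.\n"
        f"Relevant memories:\n{memory_block}\n"
        f"User: {user_message}\n"
        f"{persona}:"
    )
```

For example, after storing "The user's name is Mira and she owns a cat called Pixel." along with two unrelated summaries about a knight, the message "How is your cat Pixel doing?" retrieves the Mira summary first because it shares the most words. Because recall depends entirely on what gets summarized and how well retrieval ranks it, a sketch like this also shows why memory quality can swing with configuration changes.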

The Dopple Ecosystem and Why Scale Became a Problem

One of Dopple AI’s most distinctive traits is the sheer volume of community-created characters. Thousands of Dopples exist across categories ranging from anime and games to philosophers, religion, and original roleplay scenarios. Some characters have accumulated millions of messages, signaling deep engagement.

This scale creates two opposing forces.

On one hand, it fosters creativity and diversity. Users can find niche characters that would never exist on more controlled platforms. On the other hand, it introduces quality instability. There is no consistent vetting process for third-party Dopples, which means personality coherence, safety boundaries, and writing quality vary wildly.

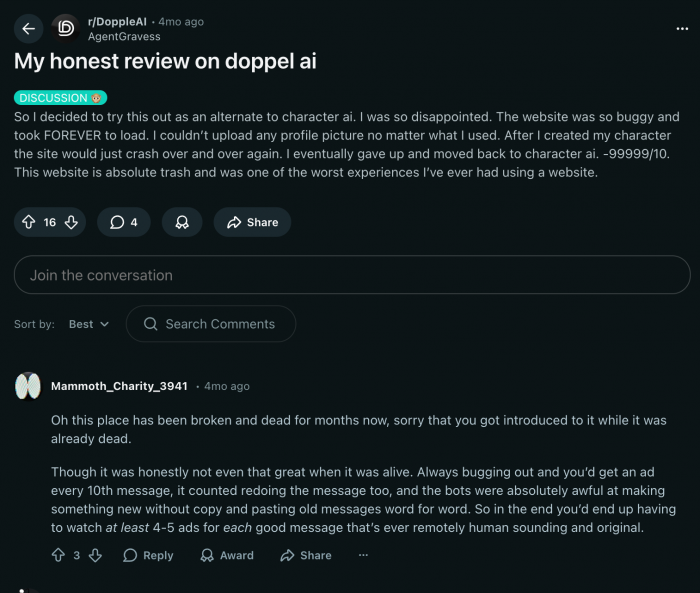

As the platform grew, many users reported that AI responses became shorter, repetitive, or prone to copying user input verbatim. Reddit threads frequently describe periods where Dopple felt “dead” or “broken,” followed by partial fixes and regressions.

This cycle has damaged trust, especially among long-term users.

Free vs Paid Experience and Why Pricing Became Controversial

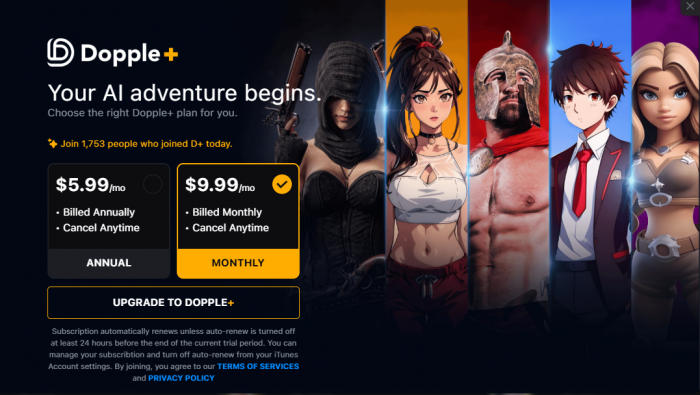

Dopple AI offers a free tier that technically allows unlimited chatting, but in practice, free users encounter frequent ads, pop-ups, and subscription prompts. Over time, additional restrictions were introduced, including daily chat limits and reduced response quality, which many users interpreted as pressure to upgrade.

The Dopple+ subscription costs around $9.99 per month, with discounts for annual billing. Premium features include an ad-free experience, enhanced visuals, exclusive Dopples, NSFW access, and early feature releases.

The controversy is not the price itself but how the transition was handled. Multiple Trustpilot and Reddit reviews describe billing confusion, ignored refund requests, and sudden changes to what free users could access. These issues created a perception that monetization decisions were reactive rather than transparent.

User Experience: Why Some Still Stay

Despite persistent complaints, Dopple AI has not collapsed. Many users continue to use it daily, and that endurance suggests the platform still offers something its competitors do not.

For roleplay enthusiasts, Dopple still offers a level of creative freedom that competitors rarely match. Characters can engage in long-form storytelling, complex emotional arcs, and scenarios that would trigger moderation elsewhere. For users invested in custom characters with extensive backstories, migrating to another platform is not trivial.

This creates a form of platform lock-in that is emotional rather than technical.

Some users openly acknowledge the bugs, instability, and ethical concerns, yet continue using Dopple because it remains familiar and expressive in ways alternatives are not.

Risks, Ethics, and the Cost of Being Unfiltered

Dopple’s uncensored design is both its appeal and its greatest liability.

Because there is minimal pre-screening of user-generated characters, the platform can expose users to hate speech, sexual violence simulations, or disturbing roleplay scenarios. While terms of service prohibit illegal and harmful content, enforcement is inconsistent, and moderation is largely reactive.

There are also privacy implications. Dopple stores and analyzes chat data to improve its models, granting the company broad rights over user conversations. For a platform built on intimate, emotionally charged interactions, this raises legitimate concerns, especially for users unsure who ultimately has access to their data.

Some reviewers describe psychological discomfort after extended use, particularly when roleplay blurs into dependency. Dopple does not position itself as a mental health tool, yet the line can become unclear for vulnerable users.

How Dopple Compares Without the Hype

Compared to Character.AI, Dopple offers greater freedom but less stability. Compared to Replika, it sacrifices emotional safety for creative range. Compared to Janitor AI, it offers a polished mobile app but struggles with consistency.

No comparison is definitive because Dopple is not trying to solve the same problem. It is not about wellness or productivity. It is about narrative immersion, even when that immersion becomes messy.

Why Dopple AI Matters Even If It Fails

Dopple AI represents a broader experiment in AI culture. It tests how much freedom users want versus how much structure they need. It shows what happens when creative platforms scale faster than their governance models.

Whether Dopple thrives, stabilizes, or eventually shuts down, its trajectory offers a clear lesson. Unfiltered AI is not just a technical challenge. It is a social one. The friction users experience is not accidental. It is the cost of operating at the edges of acceptable content without fully resolving the responsibilities that come with it.

For users, Dopple AI is neither a clear recommendation nor a cautionary tale. It is a reminder to understand what you are trading for freedom, and whether that trade still feels worth it.
