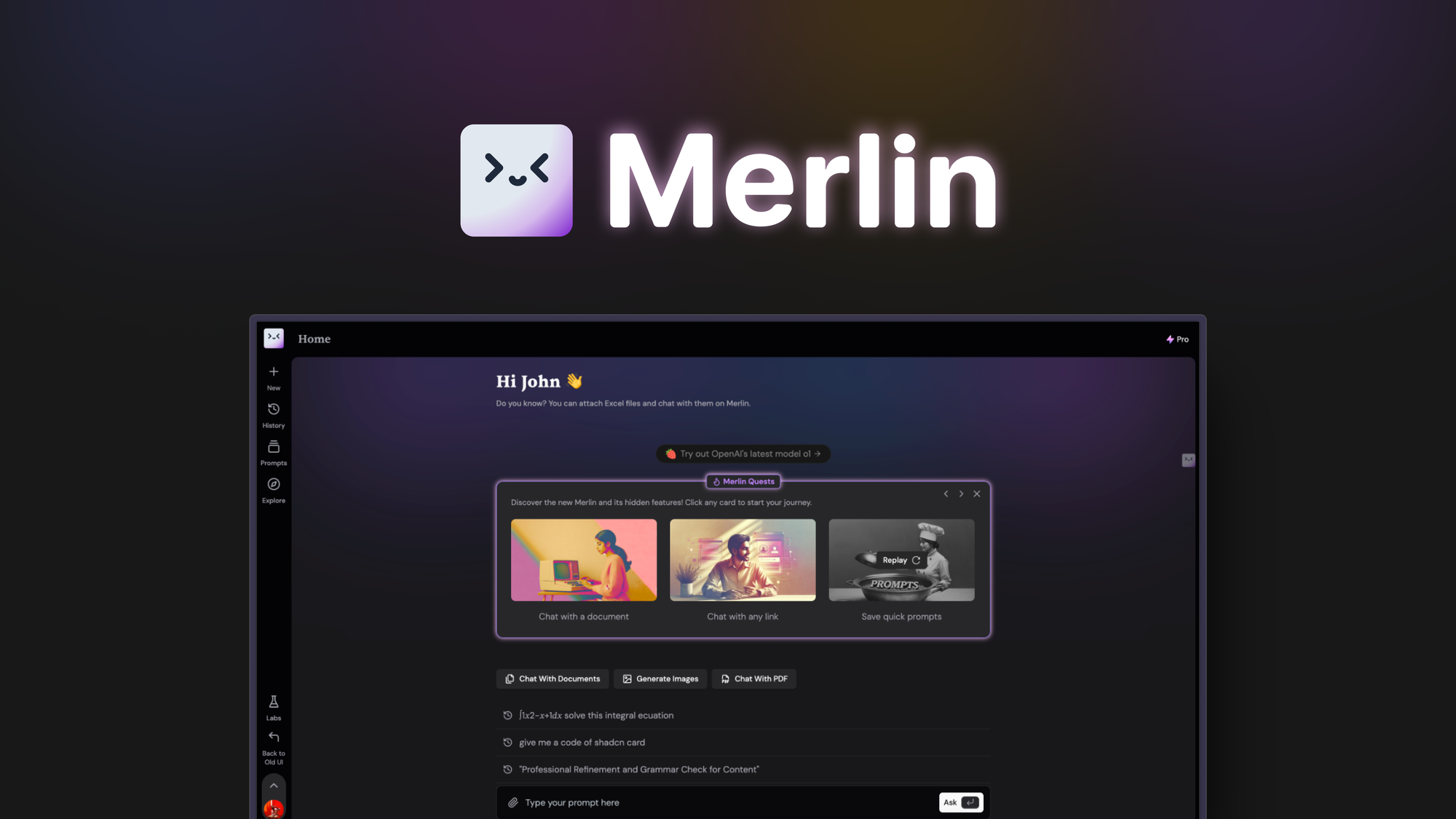

Merlin AI is often installed casually, usually as a browser extension someone wants to “try out” for summarizing an article or speeding up an email. At first glance, it feels like a practical addition: lightweight, easy to access, and always available inside the browser.

For many users, that initial impression is positive. Merlin AI doesn't demand setup time or a learning curve. It quietly integrates into everyday browsing and writing tasks, which is exactly what makes it appealing. However, as usage deepens, especially for paying users, some noticeable limitations begin to surface.

Merlin AI: What It Is Meant to Be vs. How It Feels in Practice

Merlin AI is designed as a browser-based AI assistant that works directly inside websites and web apps. Instead of acting as a standalone chatbot, it overlays AI assistance on top of existing workflows like reading articles, drafting emails, or organizing text.

In theory, this approach is efficient. Users can highlight text, invoke AI actions, and move on without switching tabs. For light, quick tasks, Merlin AI generally delivers on that promise.

In practice, however, experienced users, particularly those familiar with the official ChatGPT or Claude platforms, often notice a gap between expectation and output. Although Merlin advertises access to advanced models such as GPT-4o, many users report that responses feel shorter, more constrained, and less capable than what the same models provide on their official platforms.

Evaluation: How Merlin AI Performs in Real-World Use

Based on both general usage and specific user complaints, Merlin AI performs unevenly depending on task complexity:

| Area | Observed Performance | Rating (out of 5) |
|---|---|---|
| Ease of Entry | Extremely easy to install and start using | ⭐⭐⭐⭐⭐ |
| Best Use Case | Short summaries, simple rewrites, quick assistance | ⭐⭐⭐⭐☆ |
| Output Length | Frequently feels limited, even on paid plans | ⭐⭐☆☆☆ |
| Context Retention | Can lose instructions during longer tasks | ⭐⭐☆☆☆ |
| Instruction Accuracy | Sometimes ignores explicit user commands | ⭐⭐☆☆☆ |
| Speed | Fast responses with minimal lag | ⭐⭐⭐⭐☆ |

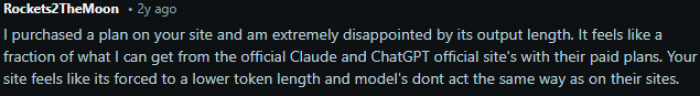

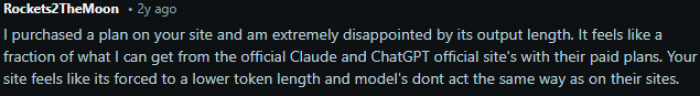

Where Problems Start: Output Length and Token Limits

One of the most common complaints from paying users is that Merlin AI’s responses feel artificially shortened. Users who upgraded expecting output comparable to official ChatGPT or Claude plans report that Merlin delivers only a fraction of the length and depth.

This becomes particularly frustrating for tasks such as:

- Writing long posts or articles

- Organizing structured content

- Expanding detailed explanations

For these use cases, Merlin AI often requires repeated prompts or external tools, which undermines its convenience.

Context Drift: When Instructions Quietly Fall Apart

One of the more frustrating issues reported by users involves context instability. In practical terms, this means Merlin AI sometimes fails to consistently follow previously given instructions within the same task.

For example, a user may clearly instruct the system not to translate a block of text, only to see the output automatically translated anyway. In other cases, formatting constraints, tone preferences, or structural requirements are ignored midway through a longer response. The AI may begin correctly, then gradually shift style, language, or structure without clear cause.

This pattern creates what users describe as “instruction fatigue.” Instead of accelerating workflow, the tool requires constant re-correction. Over time, this reduces trust in the output.

The impact becomes more noticeable in workflows that depend on precision:

- Content organization tasks, where headings, bullet structures, and formatting rules must remain consistent

- Multilingual workflows, where translation boundaries and language separation are critical

- Structured editing environments, such as legal drafting, academic editing, or policy documentation

When instructions do not persist across an interaction, users spend additional time supervising rather than producing. The efficiency advantage that AI tools promise becomes partially offset by monitoring overhead.

In high-stakes or time-sensitive scenarios, this inconsistency can feel less like a minor bug and more like a reliability concern.

Model Behavior Differences: When It Feels Like a “Lite” Version

Another recurring criticism involves model behavior consistency. Merlin AI allows users to select premium models such as GPT-4o or Claude. However, some experienced users report that the outputs do not always resemble the expected performance of those official model environments.

The differences described include:

- Responses that feel shorter or more constrained

- Less nuanced reasoning in complex prompts

- Reduced coherence in long-form answers

- More generic phrasing compared to direct platform use

For casual users generating quick summaries or short answers, this difference may not be obvious. But for advanced users familiar with how GPT-4o or Claude typically respond in their native interfaces, subtle behavioral shifts become noticeable.

This creates perception gaps.

When users pay for access to specific models, they expect parity in output quality and reasoning depth. If the responses feel simplified or filtered differently, it can create uncertainty about how the model is being routed, processed, or constrained within the platform.

The concern is not necessarily that Merlin AI is malfunctioning. Rather, it is that the integration layer may alter behavior in ways that reduce fidelity to the original model experience.

For productivity tools positioned as premium AI aggregators, that distinction matters. Power users often choose tools based on the expectation of model authenticity. If the behavior diverges too far from the official environment, trust can erode.

Pros and Cons: A More Realistic Breakdown

Pros

- Seamless browser integration: Merlin AI fits naturally into everyday browsing without disrupting workflow.

- Very low learning curve: no setup, no dashboards; just install and use.

- Helpful for lightweight tasks: short summaries, quick rewrites, and basic drafting work reasonably well.

Cons

- Noticeably limited output length: even premium users report restrictive responses.

- Poor context retention in longer sessions: instructions can be ignored or forgotten.

- Inconsistent behavior compared to official AI platforms: this creates unmet expectations for experienced users.

- Not dependable for serious writing or organization: complex tasks often require switching tools.

Final Verdict: Who Merlin AI Is (and Isn’t) For

Merlin AI works best as a casual, convenience-focused assistant for users who want quick help with reading and writing directly in their browser. For light tasks, it can genuinely save time.

However, based on real user feedback, Merlin AI is not ideal for power users, professional writers, or anyone expecting the full capabilities of the official ChatGPT or Claude models, and the gap is felt most sharply by users on paid plans. Output limits, context issues, and behavioral inconsistencies hold it back from being a dependable primary AI tool.

In short, Merlin AI is useful when expectations are modest. But for users seeking depth, consistency, and long-form reliability, it may fall short.

