1) Maxim AI — Full-Stack Prompt & Evaluation Platform

1. Primary Purpose-

All-in-one prompt engineering + experimentation + evaluation + observability suite.

2. Core Features-

● Playground++ with versioning & metadata

● A/B tests, canary prompt rollouts

● Cross-model side-by-side prompt comparisons

● Bulk experimentation with variables & model combinations

● Integrated analytics (latency, cost, quality)
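Canary rollouts and A/B tests of prompts usually come down to sending a fixed, stable share of traffic to a candidate prompt version. The following is a minimal sketch of that routing step. It assumes hash-based bucketing on a user ID; the function name and version labels are hypothetical, and this is not Maxim's SDK.

```python
import hashlib


def choose_prompt_version(user_id: str, canary_fraction: float,
                          stable: str = "onboarding-v1",
                          canary: str = "onboarding-v2") -> str:
    """Route a user to the stable or canary prompt version.

    The version labels are hypothetical. Hashing the user ID (rather than
    calling random()) keeps each user on the same version across requests,
    so A/B metrics are not polluted by users flipping between variants.
    """
    if not 0.0 <= canary_fraction <= 1.0:
        raise ValueError("canary_fraction must be between 0 and 1")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")   # roughly uniform in [0, 2**64)
    threshold = int(canary_fraction * 2**64)     # buckets below this get the canary
    return canary if bucket < threshold else stable


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    in_canary = sum(choose_prompt_version(u, 0.05) == "onboarding-v2" for u in users)
    print(f"canary share: {in_canary / len(users):.3f}")  # close to 0.05, not exact
```

The comparison is monotone in `canary_fraction`. Widening a rollout from 5% to 25% therefore keeps every user who is already on the canary there and only adds new users.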

3. Use Cases & Workflows-

Example: A product team A/B tests onboarding prompts across five LLMs and compares throughput, user satisfaction, and cost impact, all from one UI.
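A comparison like this has two steps. First, every prompt variant is expanded against every model. Second, metrics are aggregated per (variant, model) pair. The sketch below assumes you already collect one result record per call. The `RunResult` fields, model labels, and sample values are hypothetical placeholders, not Maxim data or its API, and mean latency stands in for throughput.

```python
from collections import defaultdict
from dataclasses import dataclass
from itertools import product
from statistics import mean


@dataclass
class RunResult:
    prompt_variant: str
    model: str
    latency_s: float
    cost_usd: float
    satisfaction: float  # e.g. a 1-5 user rating (hypothetical metric)


def experiment_grid(variants, models):
    """All (prompt variant, model) pairs a bulk experiment would run."""
    return list(product(variants, models))


def summarize(results):
    """Group runs by (variant, model) and compute side-by-side metrics."""
    groups = defaultdict(list)
    for r in results:
        groups[(r.prompt_variant, r.model)].append(r)
    return {
        key: {
            "runs": len(runs),
            "mean_latency_s": mean(r.latency_s for r in runs),
            "mean_satisfaction": mean(r.satisfaction for r in runs),
            "total_cost_usd": sum(r.cost_usd for r in runs),
        }
        for key, runs in groups.items()
    }


if __name__ == "__main__":
    models = ["model-a", "model-b", "model-c", "model-d", "model-e"]
    print(len(experiment_grid(["onboarding-v1", "onboarding-v2"], models)))  # 10
    demo = [  # placeholder numbers, for illustration only
        RunResult("onboarding-v1", "model-a", 1.2, 0.004, 4.0),
        RunResult("onboarding-v1", "model-a", 0.8, 0.004, 5.0),
        RunResult("onboarding-v2", "model-a", 1.0, 0.006, 3.0),
    ]
    for key, stats in sorted(summarize(demo).items()):
        print(key, stats)
```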

4. Metrics / Performance Data-

Teams report faster iteration cycles after replacing manual comparison spreadsheets and separate tools, cutting the time to a stable prompt from weeks to days.

5. Strengths & Weaknesses-

Pros: Full lifecycle support, rich analytics, cross-model evaluation

Cons: Can be too heavy for solo developers or simple use cases

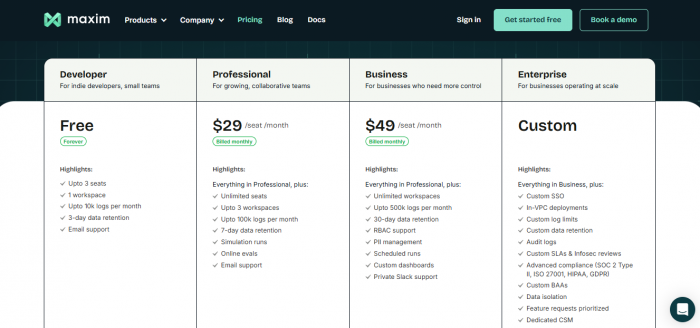

6. Pricing & Licensing-

Offers a free plan for developers, a $29/month plan for professionals, a $49/month plan for businesses, and custom plans for enterprises.

7. Integration Ecosystem-

Supports OpenAI, Anthropic, Amazon Bedrock, and Vertex AI, plus plug-ins for RAG pipelines and vector databases.

8. Who Swears By It-

AI/ML engineering teams at mid-sized to large SaaS and platform companies.

9. Expert Quotes-

Industry forums cite Maxim as one of the “most comprehensive stacks for prompt experimentation at scale.”

Verdict-

Best all-in-one platform for teams that treat prompts like production code.

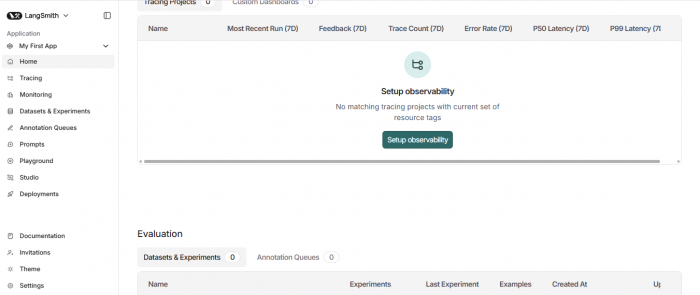

2) LangSmith — Debugging, Tracing & Evaluation Deep Dive

1. Primary Purpose-

Prompt debugging, execution tracing, versioning, and evaluation.

2. Core Features-

● Prompt Hub with version controls

● Playground with multi-turn context

● Execution trace records (inputs → outputs → tokens)

● Dataset-based test runs with automated metrics
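To make "execution trace records" concrete: a trace is typically a tree of spans, one per LLM call or chain step, and each span carries its inputs, outputs, and token counts. The dataclass below is a hypothetical, simplified model for illustration only. It is not LangSmith's run schema or SDK.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Iterator


@dataclass
class Span:
    """One step in a chain (LLM call, retriever, parser...); simplified and hypothetical."""
    name: str
    inputs: dict[str, Any]
    outputs: dict[str, Any]
    prompt_tokens: int = 0
    completion_tokens: int = 0
    children: list[Span] = field(default_factory=list)

    def walk(self) -> Iterator[Span]:
        """Depth-first traversal: this span, then its children in order."""
        yield self
        for child in self.children:
            yield from child.walk()

    def total_tokens(self) -> int:
        """Sum prompt + completion tokens over the whole subtree."""
        return sum(s.prompt_tokens + s.completion_tokens for s in self.walk())


if __name__ == "__main__":
    trace = Span("qa_chain", {"question": "What is our refund window?"}, {"answer": "30 days"},
                 children=[
                     Span("retrieve", {"query": "refund window"}, {"docs": 3}),
                     Span("llm_answer", {"context_docs": 3}, {"text": "30 days"},
                          prompt_tokens=412, completion_tokens=9),
                 ])
    print(trace.total_tokens())            # 421
    print([s.name for s in trace.walk()])  # ['qa_chain', 'retrieve', 'llm_answer']
```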

3. Use Cases-

Engineers building complex LangChain workflows use LangSmith to pinpoint where a prompt change breaks downstream logic.
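Finding where a prompt change breaks logic usually means comparing a trace recorded before the change with one recorded after it. You walk the two step by step and stop at the first step whose output differs. The following is a minimal, framework-agnostic sketch that assumes each trace has been flattened into an ordered list of `(step_name, output)` pairs. The format and function name are hypothetical; this is not a LangSmith API.

```python
from typing import Any, List, Optional, Tuple

# Hypothetical flattened trace format: (step name, step output) in execution order.
Step = Tuple[str, Any]


def first_divergence(before: List[Step], after: List[Step]) -> Optional[int]:
    """Index of the first step whose name or output changed, or None if identical."""
    for i, (old, new) in enumerate(zip(before, after)):
        if old != new:
            return i
    if len(before) != len(after):
        return min(len(before), len(after))  # a step was added or dropped at the end
    return None


if __name__ == "__main__":
    baseline = [("classify", "billing"), ("retrieve", ["doc-7"]),
                ("answer", "Refunds take 30 days.")]
    changed = [("classify", "billing"), ("retrieve", ["doc-2"]),
               ("answer", "I could not find that policy.")]
    i = first_divergence(baseline, changed)
    print(i, changed[i][0])  # 1 retrieve
```

Exact equality is deliberately strict. For free-text outputs, you would likely swap in a semantic or rubric-based comparison.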

4. Metrics / Performance-

Teams report faster bug resolution and drift detection in prompt chains.

5. Strengths & Weaknesses-

Pros: Strong integration with LangChain

Cons: Less suited for teams not using LangChain

6. Pricing-

Tiered plans, typically starting with a basic free tier and scaling up to enterprise licensing.

7. Integration Ecosystem-

Native LangChain + LangGraph focus, extensible to most LLM providers. (Maxim AI)

8. Who Swears By It-

LangChain developers and teams managing multi-step chains.

Verdict-

Best for LangChain projects and complex prompt+chain debugging.

3) PromptLayer — Prompt Versioning & Observability

1. Primary Purpose-

Tracking, versioning, logging, and observability of prompt calls.

2. Core Features-

● Git-style prompt version control

● Execution logs (prompt, response, latency, cost)

● Analytics dashboards

● Rollback & comparison diffs
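A small in-memory registry can illustrate Git-style prompt versioning, diffs, and rollback. This is a hypothetical sketch of the idea, not PromptLayer's SDK. A real registry would persist versions and record authors and timestamps.

```python
from __future__ import annotations

import difflib


class PromptRegistry:
    """Hypothetical in-memory prompt history: append-only versions plus an active pointer."""

    def __init__(self) -> None:
        self._versions: dict[str, list[str]] = {}
        self._active: dict[str, int] = {}

    def publish(self, name: str, template: str) -> int:
        """Append a new version, make it active, and return its 1-based number."""
        history = self._versions.setdefault(name, [])
        history.append(template)
        self._active[name] = len(history)
        return len(history)

    def get(self, name: str, version: int | None = None) -> str:
        """Return a specific version, or the active version if none is given."""
        history = self._versions[name]
        v = self._active[name] if version is None else version
        if not 1 <= v <= len(history):
            raise ValueError(f"{name} has no version {v}")
        return history[v - 1]

    def diff(self, name: str, old: int, new: int) -> str:
        """Unified diff between two versions, in the style of `git diff`."""
        a = self.get(name, old).splitlines(keepends=True)
        b = self.get(name, new).splitlines(keepends=True)
        return "".join(difflib.unified_diff(a, b, fromfile=f"{name}@v{old}",
                                            tofile=f"{name}@v{new}"))

    def rollback(self, name: str, version: int) -> None:
        """Re-activate an earlier version; history is kept, nothing is deleted."""
        self.get(name, version)  # raises ValueError if the version does not exist
        self._active[name] = version


if __name__ == "__main__":
    reg = PromptRegistry()
    reg.publish("support", "You are a support agent.\nAnswer briefly.\n")
    reg.publish("support", "You are a support agent.\nAnswer in detail.\n")
    print(reg.diff("support", 1, 2))
    reg.rollback("support", 1)
    print(reg.get("support"))
```

Rollback here only moves the active pointer. The bad version stays in history, which is what keeps an audit trail intact.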

3. Use Cases-

Track changes across prompt versions in production, and regression-test outputs before release.

4. Performance Gains-

Teams report roughly a 30% reduction in regressions from version discipline, compared with tracking prompts in ad-hoc engineering notebooks.

5. Strengths & Weaknesses-

Pros: Excellent for multi-contributor teams

Cons: Doesn’t deeply evaluate semantic quality out of the box

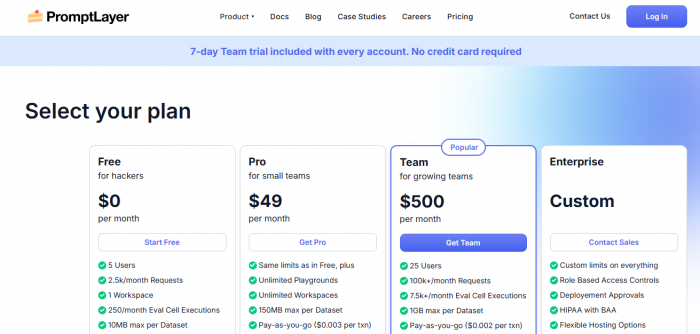

6. Pricing-

Free and paid tiers, priced by API request volume. (Medium)

7. Integrations-

LangChain, OpenAI, custom APIs. (refontelearning.com)

8. Who Swears By It-

Prompt engineering teams with compliance or audit needs.

Verdict-

Best tool for governance and version discipline.

4) Langfuse — Open-Source LLM Observability + Prompt Management

1. Purpose-

Unified open-source platform for tracing, prompt storage, and evaluation.

2. Core Features-

● End-to-end LLM lifecycle traces

● Prompt versioning + comparison

● Latency, cost, output analysis dashboards

● Human annotations & evaluation sets (Snippets AI)
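Latency and cost dashboards like these are aggregations over logged calls. The following is a minimal sketch of those aggregations, assuming each logged call records its latency and token counts. The per-1K-token prices, model label, and field names are placeholders, not Langfuse's data model or any provider's real pricing.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import quantiles

# Placeholder USD prices per 1,000 tokens; not real rates for any provider.
PRICE_PER_1K = {"model-a": {"input": 0.0005, "output": 0.0015}}


@dataclass
class LoggedCall:
    model: str
    latency_ms: float
    input_tokens: int
    output_tokens: int


def call_cost(call: LoggedCall) -> float:
    """Cost of one call: tokens times the per-1K-token price, divided by 1,000."""
    price = PRICE_PER_1K[call.model]
    return (call.input_tokens * price["input"]
            + call.output_tokens * price["output"]) / 1000


def dashboard(calls: list[LoggedCall]) -> dict[str, float]:
    """p50/p95 latency and total cost for a batch of calls (needs at least 2 calls)."""
    cuts = quantiles([c.latency_ms for c in calls], n=100, method="inclusive")
    return {
        "p50_latency_ms": cuts[49],   # 50th percentile (median)
        "p95_latency_ms": cuts[94],   # 95th percentile
        "total_cost_usd": sum(call_cost(c) for c in calls),
    }


if __name__ == "__main__":
    calls = [LoggedCall("model-a", ms, 800, 200) for ms in (420, 380, 510, 2900, 450)]
    print(dashboard(calls))
```

In the demo, the single 2,900 ms outlier barely moves p50 but dominates p95. This is why latency dashboards usually show tail percentiles and not only averages.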

3. Use Case-

Self-hosted teams needing complete observability with flexible deployment.

4. Strengths & Weaknesses-

Pros: Transparent and extensible open source

Cons: Requires infrastructure management

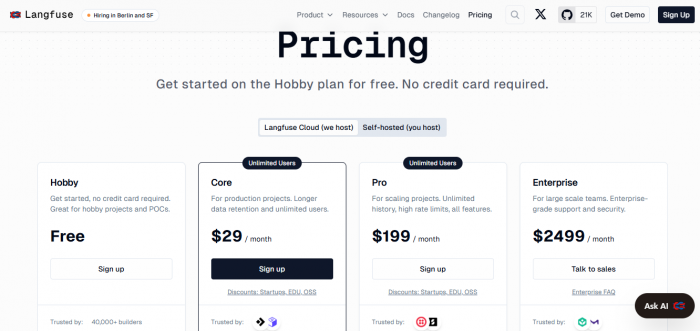

5. Pricing-

Free and open source, with paid support options.

6. Integrations-

OpenAI, LangChain, Flowise, agents. (Snippets AI)

Verdict-

Best for teams needing open infrastructure transparency.

5) Weights & Biases Prompts — ML-Grade Prompt Experimentation

1. Purpose-

Bring experiment tracking discipline from ML to LLM prompt engineering.

2. Core Features-

● Prompt tracking alongside hyperparameters

● Rich visual comparisons & charts

● Artifact versioning

● Collaborative reporting (Maxim AI)
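The core experiment-tracking idea is that every run logs its configuration next to its metrics, so runs can be compared later. Here the configuration is the prompt template plus model hyperparameters such as temperature. The stdlib-only sketch below imitates that pattern with a JSON Lines file. The file name, fields, and the `log_run`/`best_run` helpers are hypothetical and are not the `wandb` API.

```python
import json
import tempfile
from pathlib import Path


def log_run(path: Path, config: dict, metrics: dict) -> None:
    """Append one run, its config and its metrics, as a single JSON line."""
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps({"config": config, "metrics": metrics}) + "\n")


def best_run(path: Path, metric: str) -> dict:
    """Load every logged run and return the one with the highest `metric`."""
    with path.open(encoding="utf-8") as f:
        runs = [json.loads(line) for line in f if line.strip()]
    return max(runs, key=lambda run: run["metrics"][metric])


if __name__ == "__main__":
    log = Path(tempfile.mkdtemp()) / "prompt_runs.jsonl"
    # Placeholder scores; in practice these come from your evaluation step.
    for temperature, accuracy in [(0.0, 0.81), (0.7, 0.74)]:
        log_run(log, {"prompt": "Summarize: {text}", "model": "model-a",
                      "temperature": temperature}, {"accuracy": accuracy})
    print(best_run(log, "accuracy")["config"])
```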

3. Use Cases-

Teams already using W&B for model training can adopt the prompt suite with little friction.

4. Strengths & Weaknesses-

Pros: Excellent visuals & team reporting

Cons: More ML-centric than UI-first

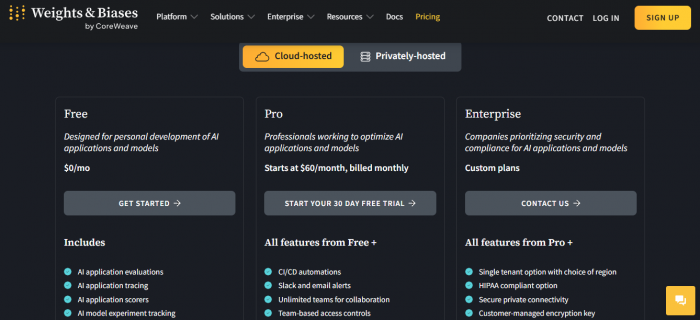

5. Pricing-

Free, Pro, and Enterprise plans.

Verdict-

Best if your org already uses W&B for ML workflows.

6) PromptPerfect — Automated Prompt Optimization

1. Purpose-

Automated prompt refinement and optimization across models.

2. Core Features-

● AI-driven prompt refinements

● Side-by-side output comparisons

● Model-agnostic compatibility

● Multilingual support (Prompts.ai)
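PromptPerfect's optimizer is proprietary, so what follows is only a generic illustration of automated prompt refinement. It is a greedy loop that tries candidate edits and keeps whichever scores best. The candidate edits and the keyword-based `toy_score` function are hypothetical stand-ins. A real optimizer would typically call an LLM both to propose rewrites and to grade outputs on an evaluation set.

```python
from typing import Callable, List, Tuple

Edit = Callable[[str], str]

# Hypothetical candidate edits; a real optimizer would typically ask an LLM to propose rewrites.
EDITS: List[Edit] = [
    lambda p: "You are a concise support agent. " + p,
    lambda p: p + " Respond in at most three sentences.",
    lambda p: p + " Use a bulleted list.",
]


def toy_score(prompt: str) -> float:
    """Hypothetical scorer: rewards a role and a length limit, penalizes prompt length.

    A real optimizer would score the model's outputs on an evaluation set instead.
    """
    score = 0.0
    score += 1.0 if "You are" in prompt else 0.0
    score += 1.0 if "at most" in prompt else 0.0
    return score - len(prompt) / 500


def greedy_optimize(prompt: str, edits: List[Edit], score: Callable[[str], float],
                    rounds: int = 5) -> Tuple[str, float]:
    """Greedy hill climbing: keep the best single edit each round; stop when none helps."""
    best, best_score = prompt, score(prompt)
    for _ in range(rounds):
        scored = [(score(c), c) for c in (edit(best) for edit in edits)]
        if not scored:
            break
        top_score, top = max(scored, key=lambda pair: pair[0])
        if top_score <= best_score:
            break  # local optimum: no single edit improves the score
        best, best_score = top, top_score
    return best, best_score


if __name__ == "__main__":
    print(greedy_optimize("Explain our refund policy.", EDITS, toy_score))
```

Greedy search is the simplest choice, but it can stop at a local optimum. Beam search, or sampling several LLM-proposed rewrites per round, are broader alternatives.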

3. Use Cases-

Non-technical teams enhancing prompt quality without deep engineering.

4. Strengths & Weaknesses-

Pros: Very user-friendly

Cons: Less control than developer-centric tools

5. Pricing-

A free plan plus paid plans at $19 and $99.

Verdict-

Best for quick optimization without deep engineering overhead.

7) Promptfoo — Dev-First Prompt Testing

1. Purpose-

CLI-first tool for automated regression testing of prompts.

2. Core Features-

● Define tests as code

● Versioned prompt tests

● Integrates with CI/CD

● Command-line focused (Medium)
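"Tests as code" means each prompt test case pairs input variables with assertions on the output. The CI job then fails if any assertion fails. Promptfoo itself expresses this in a YAML config that its CLI runs; the Python sketch below only mirrors the concept using the standard library. The `fake_model` stand-in, the prompt, and the test cases are hypothetical.

```python
from __future__ import annotations

import sys
from dataclasses import dataclass, field
from typing import Callable

PROMPT = "Summarize this for a customer: {text}"


@dataclass
class PromptTest:
    variables: dict[str, str]                      # values substituted into PROMPT
    must_contain: list[str] = field(default_factory=list)
    max_chars: int = 500


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call so the sketch runs offline."""
    return "Refunds are issued within 30 days of purchase."


def run_suite(tests: list[PromptTest], model: Callable[[str], str]) -> list[str]:
    """Run every test case and return human-readable failure messages."""
    failures = []
    for i, test in enumerate(tests):
        output = model(PROMPT.format(**test.variables))
        for needle in test.must_contain:
            if needle.lower() not in output.lower():
                failures.append(f"test {i}: missing {needle!r}")
        if len(output) > test.max_chars:
            failures.append(f"test {i}: output longer than {test.max_chars} chars")
    return failures


TESTS = [PromptTest({"text": "Our refund window is 30 days."}, ["30 days", "refund"])]

if __name__ == "__main__":
    failures = run_suite(TESTS, fake_model)
    for message in failures:
        print("FAIL", message)
    if failures:
        sys.exit(1)  # a non-zero exit status is what makes the CI job fail
    print(f"{len(TESTS)} test(s) passed")
```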

3. Use Cases-

Testing teams that gate prompt quality on automated regression tests.

4. Strengths & Weaknesses-

Pros: Integrates into developer workflows

Cons: Primarily command-line, with little visual UI

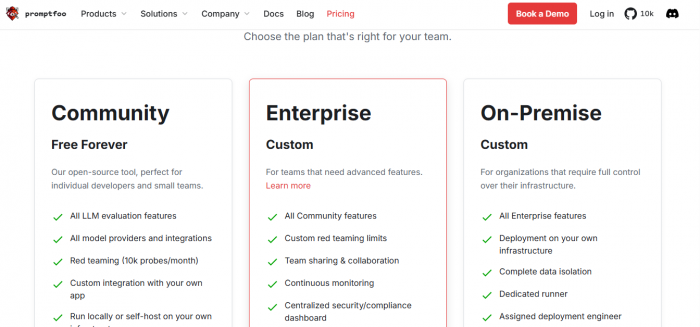

5. Pricing-

A free plan plus custom plans tailored to your requirements.

Verdict-

Best for test-driven prompt engineering workflows.

Comparison Table: Key Criteria (2026)

| Tool | Versioning | Eval Metrics | Debugging/Traces | Ease of Use | Best For |
|---|---|---|---|---|---|
| Maxim AI | Basic prompt version tracking | Solid built-in evaluation metrics | Good tracing and debugging support | Requires technical familiarity | Full-stack teams |
| LangSmith | Tightly coupled to LangChain workflows | Strong evaluation support for chains | Excellent trace-level visibility | Steep learning curve if not using LangChain | LangChain developers |
| PromptLayer | Strong governance and version history | Limited native evaluation depth | Basic debugging capabilities | Reasonably easy for teams | Teams with governance needs |
| Langfuse | Prompt versioning and comparison | Evaluation sets and human annotations | End-to-end lifecycle traces | Setup-heavy (self-hosting) | Self-hosted developers |
| W&B Prompts | Experiment-centric versioning | Strong metrics via the W&B ecosystem | Limited prompt-specific tracing | Familiar to ML teams | ML organizations |
| PromptPerfect | Minimal version tracking | Light evaluation only | Almost no debugging visibility | Extremely easy; no technical skills required | Non-technical users |
| Promptfoo | Simple config-based versions | Very limited evaluation metrics | Minimal debugging support | CLI-oriented, developer-only | Developer test workflows |

Final 2026 Ranking Rationale

1. Maxim AI — Most complete, reduces iteration cycles and cognitive load across teams. (Maxim AI)

2. LangSmith — Deep debug + trace focus is crucial for complex pipelines. (Maxim AI)

3. PromptLayer — Versioning + observability is essential for enterprise prompt governance. (Medium)

4. Langfuse — Open-source alternative with strong observability. (Snippets AI)

5. Weights & Biases Prompts — Great for teams with existing ML workflows. (Maxim AI)

6. PromptPerfect — Best for rapid optimization with minimal engineering. (Prompts.ai)

7. Promptfoo — Developer-centric test automation for CI/CD. (Medium)
