LlamaIndex is an open-source developer library for connecting large language models (LLMs) to external data. It provides utilities to ingest, structure, and index data in various formats so that a language model can retrieve relevant content and generate responses grounded in it. By abstracting data connectors and query layers, LlamaIndex supports retrieval-augmented generation (RAG) and custom AI workflows tailored to specific datasets.

How LlamaIndex Operates

LlamaIndex functions as a middleware layer that structures and indexes data for efficient LLM access. Developers connect data sources such as documents, databases, or knowledge bases, and LlamaIndex builds indexes over them that serve as lookup layers. At query time, these indexes supply relevant information to the LLM prompt, so that natural-language queries and structured interactions produce outputs informed by the underlying data.
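
The flow described above (ingest data, build an index, retrieve relevant content, place it in the prompt) can be sketched in plain Python. This is a toy illustration of the general pattern, not LlamaIndex's API: the sample documents and the names `build_index`, `retrieve`, and `build_prompt` are hypothetical, and the simple keyword index stands in for the embedding-based indexes that production RAG systems commonly use.

```python
import re
from collections import defaultdict

# Hypothetical toy corpus standing in for documents loaded from a data source.
DOCUMENTS = {
    "refunds.txt": "Refunds are issued within 14 days of a return request.",
    "shipping.txt": "Standard shipping takes 3 to 5 business days.",
    "warranty.txt": "Hardware carries a two year limited warranty.",
}

def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(documents):
    """Map each token to the set of document names that contain it."""
    index = defaultdict(set)
    for name, text in documents.items():
        for token in tokenize(text):
            index[token].add(name)
    return index

def retrieve(index, documents, question, top_k=1):
    """Rank documents by how many distinct question tokens they contain."""
    scores = defaultdict(int)
    for token in set(tokenize(question)):
        for name in index.get(token, ()):
            scores[name] += 1
    # Highest score first; ties are broken by document name for determinism.
    ranked = sorted(scores, key=lambda n: (-scores[n], n))
    return [documents[name] for name in ranked[:top_k]]

def build_prompt(question, passages):
    """Place the retrieved passages ahead of the question in one prompt string."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"

index = build_index(DOCUMENTS)
question = "How long does shipping take?"
prompt = build_prompt(question, retrieve(index, DOCUMENTS, question))
print(prompt)
```

The resulting prompt string is what would be sent to an LLM; that call is left out here so the sketch runs without any external service or API key.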

Practical Applications

Industry Impact

LlamaIndex is applied in research, customer support automation, internal knowledge platforms, legal compliance search, and other areas where LLMs need to work with unstructured data.

Key Considerations

Pros:

Connects LLMs to external data sources efficiently

Simplifies retrieval-augmented generation (RAG) workflows

Supports structured, unstructured, and multi-modal data

Modular and flexible for custom AI pipelines

Open-source with growing community support

Works well for building knowledge-base and document Q&A applications

Cons:

Updates may introduce breaking changes

Less polished UI; mostly backend-focused

Limited ready-made templates compared to full frameworks

Advanced integrations require coding knowledge

Performance depends on data quality and indexing strategy

Features

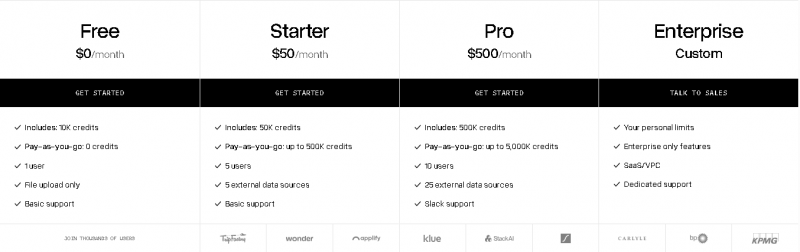

*Price last updated on Jan 9, 2026. Visit llamaindex.ai's pricing page for the latest pricing.